Upload folder using huggingface_hub

- .gitattributes +1 -0

- README.md +164 -0

- model_index.json +29 -0

- processor/added_tokens.json +24 -0

- processor/chat_template.jinja +7 -0

- processor/merges.txt +0 -0

- processor/preprocessor_config.json +37 -0

- processor/special_tokens_map.json +31 -0

- processor/tokenizer.json +3 -0

- processor/tokenizer_config.json +208 -0

- processor/video_preprocessor_config.json +44 -0

- processor/vocab.json +0 -0

- quantization_info.json +6 -0

- scheduler/scheduler_config.json +18 -0

- text_encoder/config.json +181 -0

- text_encoder/generation_config.json +14 -0

- text_encoder/model-00001-of-00002.safetensors +3 -0

- text_encoder/model-00002-of-00002.safetensors +3 -0

- text_encoder/model.safetensors.index.json +0 -0

- tokenizer/added_tokens.json +24 -0

- tokenizer/chat_template.jinja +54 -0

- tokenizer/merges.txt +0 -0

- tokenizer/special_tokens_map.json +31 -0

- tokenizer/tokenizer_config.json +207 -0

- tokenizer/vocab.json +0 -0

- transformer/config.json +64 -0

- transformer/diffusion_pytorch_model-00001-of-00002.safetensors +3 -0

- transformer/diffusion_pytorch_model-00002-of-00002.safetensors +3 -0

- transformer/diffusion_pytorch_model.safetensors.index.json +0 -0

- vae/config.json +103 -0

- vae/diffusion_pytorch_model.safetensors +3 -0

.gitattributes

CHANGED

@@ -33,3 +33,4 @@ saved_model/**/* filter=lfs diff=lfs merge=lfs -text

*.zip filter=lfs diff=lfs merge=lfs -text
*.zst filter=lfs diff=lfs merge=lfs -text
*tfevents* filter=lfs diff=lfs merge=lfs -text
+processor/tokenizer.json filter=lfs diff=lfs merge=lfs -text
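The added rule routes `processor/tokenizer.json` through Git LFS. Presumably this is because the file is too large to commit as a regular Git blob on the Hub: its pointer further down gives 11421896 bytes. The sketch below approximates which paths the rules in this hunk would send to LFS. It is a simplification, not Git's matcher. It uses Python's `fnmatch`, whose `*` also matches `/`, and it treats slash-free patterns as basename matches. Only the four patterns visible in the hunk are included, and `is_lfs_tracked` is a hypothetical helper.

```python
from fnmatch import fnmatchcase

# Only the patterns visible in the hunk above; the full file has more rules.
LFS_PATTERNS = [
    "*.zip",
    "*.zst",
    "*tfevents*",
    "processor/tokenizer.json",
]


def is_lfs_tracked(path, patterns=LFS_PATTERNS):
    """Approximate whether a repo-relative `path` is stored via Git LFS.

    Simplified rules (not Git's exact matcher):
    - a pattern without "/" is matched against the basename only;
    - a pattern containing "/" is matched against the whole path.
    fnmatch's "*" can also match "/", which Git's "*" does not.
    """
    basename = path.rsplit("/", 1)[-1]
    for pattern in patterns:
        target = path if "/" in pattern else basename
        if fnmatchcase(target, pattern):
            return True
    return False


for p in ["processor/tokenizer.json", "tokenizer/vocab.json", "runs/events.out.tfevents.1"]:
    print(p, is_lfs_tracked(p))
```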

README.md

ADDED

|

@@ -0,0 +1,164 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

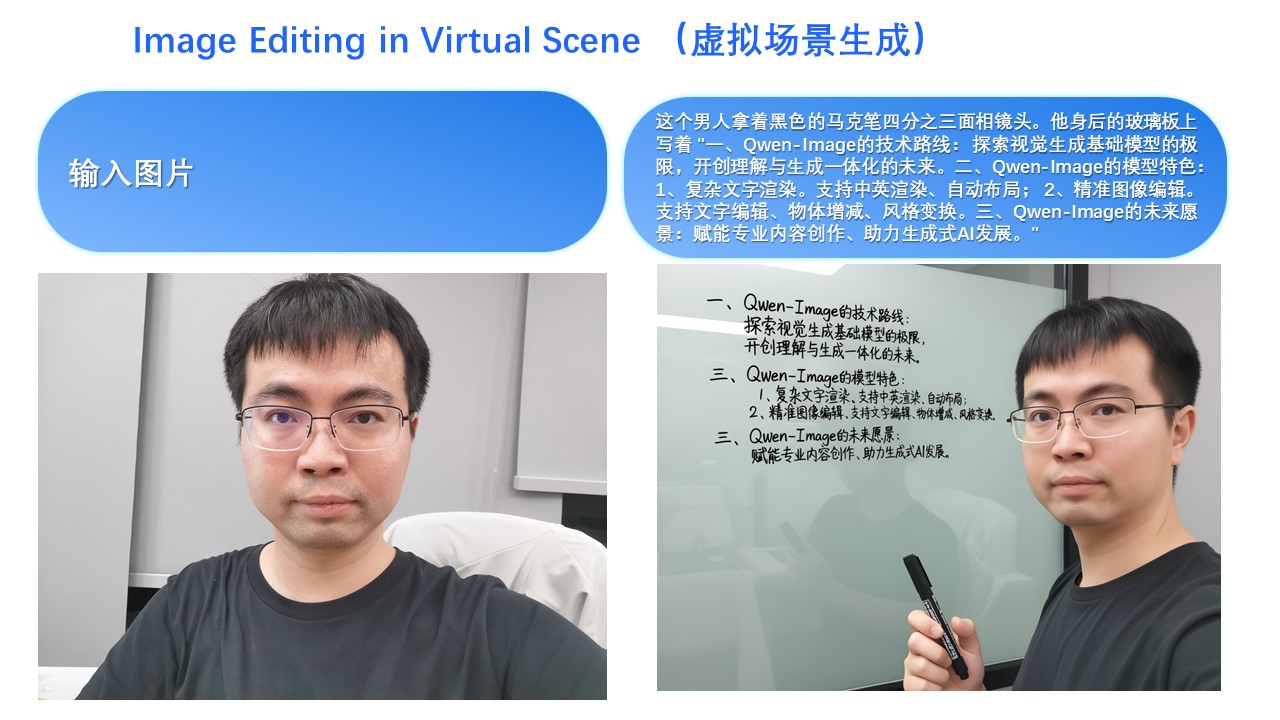

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

---

|

| 2 |

+

license: apache-2.0

|

| 3 |

+

language:

|

| 4 |

+

- en

|

| 5 |

+

- zh

|

| 6 |

+

library_name: diffusers

|

| 7 |

+

pipeline_tag: image-to-image

|

| 8 |

+

---

|

| 9 |

+

<p align="center">

|

| 10 |

+

<img src="https://qianwen-res.oss-cn-beijing.aliyuncs.com/Qwen-Image/qwen_image_edit_logo.png" width="400"/>

|

| 11 |

+

<p>

|

| 12 |

+

<p align="center">

|

| 13 |

+

💜 <a href="https://chat.qwen.ai/"><b>Qwen Chat</b></a>   |   🤗 <a href="https://huggingface.co/Qwen/Qwen-Image-Edit-2509">Hugging Face</a>   |   🤖 <a href="https://modelscope.cn/models/Qwen/Qwen-Image-Edit-2509">ModelScope</a>   |    📑 <a href="https://qianwen-res.oss-cn-beijing.aliyuncs.com/Qwen-Image/Qwen_Image.pdf">Tech Report</a>    |    📑 <a href="https://qwenlm.github.io/blog/qwen-image-edit/">Blog</a>

|

| 14 |

+

<br>

|

| 15 |

+

🖥️ <a href="https://huggingface.co/spaces/Qwen/Qwen-Image-Edit">Demo</a>   |   💬 <a href="https://github.com/QwenLM/Qwen-Image/blob/main/assets/wechat.png">WeChat (微信)</a>   |   🫨 <a href="https://discord.gg/CV4E9rpNSD">Discord</a>  |    <a href="https://github.com/QwenLM/Qwen-Image">Github</a>

|

| 16 |

+

</p>

|

| 17 |

+

|

| 18 |

+

<p align="center">

|

| 19 |

+

<img src="https://qianwen-res.oss-accelerate-overseas.aliyuncs.com/Qwen-Image/edit2509/edit2509_top.jpg" width="1600"/>

|

| 20 |

+

<p>

|

| 21 |

+

|

| 22 |

+

|

| 23 |

+

# Introduction

|

| 24 |

+

This September, we are pleased to introduce Qwen-Image-Edit-2509, the monthly iteration of Qwen-Image-Edit. To experience the latest model, please visit [Qwen Chat](https://qwen.ai) and select the "Image Editing" feature.

|

| 25 |

+

Compared with Qwen-Image-Edit released in August, the main improvements of Qwen-Image-Edit-2509 include:

|

| 26 |

+

* **Multi-image Editing Support**: For multi-image inputs, Qwen-Image-Edit-2509 builds upon the Qwen-Image-Edit architecture and is further trained via image concatenation to enable multi-image editing. It supports various combinations such as "person + person," "person + product," and "person + scene." Optimal performance is currently achieved with 1 to 3 input images.

|

| 27 |

+

* **Enhanced Single-image Consistency**: For single-image inputs, Qwen-Image-Edit-2509 significantly improves editing consistency, specifically in the following areas:

|

| 28 |

+

- **Improved Person Editing Consistency**: Better preservation of facial identity, supporting various portrait styles and pose transformations;

|

| 29 |

+

- **Improved Product Editing Consistency**: Better preservation of product identity, supporting product poster editing;

|

| 30 |

+

- **Improved Text Editing Consistency**: In addition to modifying text content, it also supports editing text fonts, colors, and materials;

|

| 31 |

+

* **Native Support for ControlNet**: Including depth maps, edge maps, keypoint maps, and more.

|

| 32 |

+

|

| 33 |

+

|

| 34 |

+

## Quick Start

Install the latest version of diffusers from source:
```
pip install git+https://github.com/huggingface/diffusers
```

The following code snippet shows how to use `Qwen-Image-Edit-2509`:

```python
import os
import torch
from PIL import Image
from diffusers import QwenImageEditPlusPipeline

# Load the pipeline weights in bfloat16.
pipeline = QwenImageEditPlusPipeline.from_pretrained("Qwen/Qwen-Image-Edit-2509", torch_dtype=torch.bfloat16)
print("pipeline loaded")

pipeline.to('cuda')
pipeline.set_progress_bar_config(disable=None)

# Multi-image editing: pass the input images as a list.
image1 = Image.open("input1.png")
image2 = Image.open("input2.png")
prompt = "The magician bear is on the left, the alchemist bear is on the right, facing each other in the central park square."
inputs = {
    "image": [image1, image2],
    "prompt": prompt,
    "generator": torch.manual_seed(0),  # fixed seed for reproducible results
    "true_cfg_scale": 4.0,
    "negative_prompt": " ",
    "num_inference_steps": 40,
    "guidance_scale": 1.0,
    "num_images_per_prompt": 1,
}
with torch.inference_mode():
    output = pipeline(**inputs)
    output_image = output.images[0]
    output_image.save("output_image_edit_plus.png")
    print("image saved at", os.path.abspath("output_image_edit_plus.png"))
```
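In the snippet above, `true_cfg_scale` is paired with a `negative_prompt` of `" "`. As far as we understand the diffusers Qwen-Image pipelines, the extra negative-prompt pass runs only when `true_cfg_scale > 1` and a negative prompt is supplied. That would explain why even a single space is passed. The sketch below shows the standard classifier-free guidance combination on plain Python lists.

`true_cfg_combine` and `uses_true_cfg` are hypothetical helpers. The optional rescale matches the combined prediction's L2 norm to the conditional prediction's norm. It mirrors what those pipelines appear to do per token, and is applied here to a single vector. Treat both the gating rule and the rescale as assumptions to check against the pipeline source.

```python
import math


def true_cfg_combine(cond, uncond, true_cfg_scale, rescale=False):
    """Classifier-free guidance on two equal-length lists of floats.

    combined = uncond + scale * (cond - uncond)
    With rescale=True, the result is scaled to the L2 norm of `cond`
    (an assumed variant, see the lead-in).
    """
    if len(cond) != len(uncond):
        raise ValueError("predictions must have the same length")
    combined = [u + true_cfg_scale * (c - u) for c, u in zip(cond, uncond)]
    if rescale:
        cond_norm = math.sqrt(sum(c * c for c in cond))
        comb_norm = math.sqrt(sum(x * x for x in combined))
        if comb_norm > 0:
            combined = [x * cond_norm / comb_norm for x in combined]
    return combined


def uses_true_cfg(true_cfg_scale, negative_prompt):
    # Assumed gating: the negative pass runs only if scale > 1 and a negative prompt exists.
    return true_cfg_scale > 1 and negative_prompt is not None


print(uses_true_cfg(4.0, " "), true_cfg_combine([3.0, 1.0], [1.0, 1.0], 2.0))
```

With a scale of 1.0 the combination reduces to the conditional prediction, so the negative pass would be wasted compute.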


## Showcase

**The primary update in Qwen-Image-Edit-2509 is support for multi-image inputs.**

Let’s first look at a "person + person" example:

Here is a "person + scene" example:

Below is a "person + object" example:

In fact, multi-image input also supports commonly used ControlNet keypoint maps, for example to change a person’s pose:

Similarly, the following examples demonstrate results using three input images:

---

**Another major update in Qwen-Image-Edit-2509 is enhanced consistency.**

First, regarding person consistency, Qwen-Image-Edit-2509 shows significant improvement over Qwen-Image-Edit. Below are examples of generating various portrait styles:

For instance, changing a person’s pose while maintaining excellent identity consistency:

Combining this improvement with Qwen-Image’s distinctive text rendering capability, Qwen-Image-Edit-2509 excels at creating meme images:

Even with longer text, Qwen-Image-Edit-2509 can still render it while preserving the person’s identity:

Person consistency is also evident in old photo restoration. Below are two examples:

Besides real people, the model can also generate cartoon characters and cultural and creative works:

Second, Qwen-Image-Edit-2509 specifically enhances product consistency. We find that the model can naturally generate product posters from plain-background product images:

Or even from simple logos:

Third, Qwen-Image-Edit-2509 specifically enhances text consistency and supports editing font type, font color, and font material:

Moreover, its precise text editing capability has been significantly enhanced:

Notably, text editing can often be seamlessly combined with image editing, as in this poster editing case:

---

**The final update in Qwen-Image-Edit-2509 is native support for commonly used ControlNet image conditions, such as keypoint control and sketches:**


## License Agreement

Qwen-Image is licensed under Apache 2.0.

## Citation

If you find our work useful, please consider citing it.

```bibtex
@misc{wu2025qwenimagetechnicalreport,
      title={Qwen-Image Technical Report},
      author={Chenfei Wu and Jiahao Li and Jingren Zhou and Junyang Lin and Kaiyuan Gao and Kun Yan and Sheng-ming Yin and Shuai Bai and Xiao Xu and Yilei Chen and Yuxiang Chen and Zecheng Tang and Zekai Zhang and Zhengyi Wang and An Yang and Bowen Yu and Chen Cheng and Dayiheng Liu and Deqing Li and Hang Zhang and Hao Meng and Hu Wei and Jingyuan Ni and Kai Chen and Kuan Cao and Liang Peng and Lin Qu and Minggang Wu and Peng Wang and Shuting Yu and Tingkun Wen and Wensen Feng and Xiaoxiao Xu and Yi Wang and Yichang Zhang and Yongqiang Zhu and Yujia Wu and Yuxuan Cai and Zenan Liu},
      year={2025},
      eprint={2508.02324},
      archivePrefix={arXiv},
      primaryClass={cs.CV},
      url={https://arxiv.org/abs/2508.02324},
}
```

model_index.json

ADDED

@@ -0,0 +1,29 @@

{
  "_class_name": "QwenImageEditPlusPipeline",
  "_diffusers_version": "0.36.0.dev0",
  "_name_or_path": "./tmp_model",
  "processor": [
    "transformers",
    "Qwen2VLProcessor"
  ],
  "scheduler": [
    "diffusers",
    "FlowMatchEulerDiscreteScheduler"
  ],
  "text_encoder": [
    "transformers",
    "Qwen2_5_VLForConditionalGeneration"
  ],
  "tokenizer": [
    "transformers",
    "Qwen2Tokenizer"
  ],
  "transformer": [
    "diffusers",
    "QwenImageTransformer2DModel"
  ],
  "vae": [
    "diffusers",
    "AutoencoderKLQwenImage"
  ]
}
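`model_index.json` tells diffusers which class to instantiate for each subfolder. Every key that does not start with `_` names a component directory, and its value is a `[library, class_name]` pair. The sketch below only reads that mapping with the standard `json` module; it imports neither diffusers nor transformers. `list_components` is a hypothetical helper.

```python
import json

# Contents of model_index.json (above), inlined so the sketch is self-contained.
MODEL_INDEX = """
{
  "_class_name": "QwenImageEditPlusPipeline",
  "_diffusers_version": "0.36.0.dev0",
  "_name_or_path": "./tmp_model",
  "processor": ["transformers", "Qwen2VLProcessor"],
  "scheduler": ["diffusers", "FlowMatchEulerDiscreteScheduler"],
  "text_encoder": ["transformers", "Qwen2_5_VLForConditionalGeneration"],
  "tokenizer": ["transformers", "Qwen2Tokenizer"],
  "transformer": ["diffusers", "QwenImageTransformer2DModel"],
  "vae": ["diffusers", "AutoencoderKLQwenImage"]
}
"""


def list_components(index_text):
    """Return {subfolder: (library, class_name)} for every pipeline component.

    Keys starting with "_" (pipeline class, diffusers version, source path)
    are metadata, not components, so they are skipped.
    """
    index = json.loads(index_text)
    return {
        name: tuple(spec)
        for name, spec in index.items()
        if not name.startswith("_")
    }


components = list_components(MODEL_INDEX)
for name, (library, cls) in sorted(components.items()):
    print(f"{name}/ -> {library}.{cls}")
```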

processor/added_tokens.json

ADDED

@@ -0,0 +1,24 @@

{
  "</tool_call>": 151658,
  "<tool_call>": 151657,
  "<|box_end|>": 151649,
  "<|box_start|>": 151648,
  "<|endoftext|>": 151643,
  "<|file_sep|>": 151664,
  "<|fim_middle|>": 151660,
  "<|fim_pad|>": 151662,
  "<|fim_prefix|>": 151659,
  "<|fim_suffix|>": 151661,
  "<|im_end|>": 151645,
  "<|im_start|>": 151644,
  "<|image_pad|>": 151655,
  "<|object_ref_end|>": 151647,
  "<|object_ref_start|>": 151646,
  "<|quad_end|>": 151651,
  "<|quad_start|>": 151650,
  "<|repo_name|>": 151663,
  "<|video_pad|>": 151656,
  "<|vision_end|>": 151653,
  "<|vision_pad|>": 151654,
  "<|vision_start|>": 151652
}
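The ids in `added_tokens.json` should agree with the ids referenced elsewhere in this commit. Examples are `bos_token_id`, `eos_token_id`, and `image_token_id` in `text_encoder/config.json`. The sketch below runs that cross-check with the file contents inlined. It also checks that the 22 added tokens occupy one contiguous block of ids.

```python
import json

# Contents of processor/added_tokens.json (above), inlined.
ADDED_TOKENS = json.loads("""
{
  "</tool_call>": 151658, "<tool_call>": 151657,
  "<|box_end|>": 151649, "<|box_start|>": 151648,
  "<|endoftext|>": 151643, "<|file_sep|>": 151664,
  "<|fim_middle|>": 151660, "<|fim_pad|>": 151662,
  "<|fim_prefix|>": 151659, "<|fim_suffix|>": 151661,
  "<|im_end|>": 151645, "<|im_start|>": 151644,
  "<|image_pad|>": 151655, "<|object_ref_end|>": 151647,
  "<|object_ref_start|>": 151646, "<|quad_end|>": 151651,
  "<|quad_start|>": 151650, "<|repo_name|>": 151663,
  "<|video_pad|>": 151656, "<|vision_end|>": 151653,
  "<|vision_pad|>": 151654, "<|vision_start|>": 151652
}
""")

# Invert the token -> id mapping into id -> token.
id_to_token = {idx: tok for tok, idx in ADDED_TOKENS.items()}
ids = sorted(id_to_token)

# The added tokens should form one contiguous block of ids.
is_contiguous = ids == list(range(ids[0], ids[-1] + 1))

# Ids referenced in text_encoder/config.json further down.
TEXT_ENCODER_IDS = {"bos_token_id": 151643, "eos_token_id": 151645, "image_token_id": 151655}

print(ids[0], ids[-1], is_contiguous)  # -> 151643 151664 True
for key, idx in TEXT_ENCODER_IDS.items():
    print(key, idx, id_to_token[idx])
```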

processor/chat_template.jinja

ADDED

@@ -0,0 +1,7 @@

{% set image_count = namespace(value=0) %}{% set video_count = namespace(value=0) %}{% for message in messages %}{% if loop.first and message['role'] != 'system' %}<|im_start|>system
You are a helpful assistant.<|im_end|>
{% endif %}<|im_start|>{{ message['role'] }}
{% if message['content'] is string %}{{ message['content'] }}<|im_end|>
{% else %}{% for content in message['content'] %}{% if content['type'] == 'image' or 'image' in content or 'image_url' in content %}{% set image_count.value = image_count.value + 1 %}{% if add_vision_id %}Picture {{ image_count.value }}: {% endif %}<|vision_start|><|image_pad|><|vision_end|>{% elif content['type'] == 'video' or 'video' in content %}{% set video_count.value = video_count.value + 1 %}{% if add_vision_id %}Video {{ video_count.value }}: {% endif %}<|vision_start|><|video_pad|><|vision_end|>{% elif 'text' in content %}{{ content['text'] }}{% endif %}{% endfor %}<|im_end|>
{% endif %}{% endfor %}{% if add_generation_prompt %}<|im_start|>assistant
{% endif %}
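Read as Python, the template does four things. It wraps each message in `<|im_start|>role ... <|im_end|>`. It prepends a default system prompt when the first message is not a system message. It replaces each image or video entry with a `<|vision_start|>...<|vision_end|>` placeholder, optionally prefixed with `Picture N:` or `Video N:` when `add_vision_id` is set. Finally, it opens an assistant turn when `add_generation_prompt` is set.

The sketch below is a hand translation of that logic for illustration. `render_chat` is hypothetical; in practice you would call the processor's `apply_chat_template`.

```python
def render_chat(messages, add_generation_prompt=True, add_vision_id=False):
    """Pure-Python approximation of processor/chat_template.jinja.

    Each message is {"role": str, "content": str | list[dict]}; list items look
    like {"type": "image"}, {"type": "video"} or {"type": "text", "text": ...}.
    """
    out = []
    image_count = 0
    video_count = 0
    for i, message in enumerate(messages):
        # Default system prompt when the conversation does not start with one.
        if i == 0 and message["role"] != "system":
            out.append("<|im_start|>system\nYou are a helpful assistant.<|im_end|>\n")
        out.append(f"<|im_start|>{message['role']}\n")
        content = message["content"]
        if isinstance(content, str):
            out.append(content)
        else:
            for item in content:
                if item.get("type") == "image" or "image" in item or "image_url" in item:
                    image_count += 1
                    if add_vision_id:
                        out.append(f"Picture {image_count}: ")
                    out.append("<|vision_start|><|image_pad|><|vision_end|>")
                elif item.get("type") == "video" or "video" in item:
                    video_count += 1
                    if add_vision_id:
                        out.append(f"Video {video_count}: ")
                    out.append("<|vision_start|><|video_pad|><|vision_end|>")
                elif "text" in item:
                    out.append(item["text"])
        out.append("<|im_end|>\n")
    if add_generation_prompt:
        out.append("<|im_start|>assistant\n")
    return "".join(out)


msgs = [{"role": "user", "content": [{"type": "image"}, {"type": "image"},
                                     {"type": "text", "text": "Swap the bears."}]}]
print(render_chat(msgs, add_vision_id=True))
```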

processor/merges.txt

ADDED

The diff for this file is too large to render.

processor/preprocessor_config.json

ADDED

@@ -0,0 +1,37 @@

{
  "crop_size": null,
  "data_format": "channels_first",
  "default_to_square": true,
  "device": null,
  "disable_grouping": null,
  "do_center_crop": null,
  "do_convert_rgb": true,
  "do_normalize": true,
  "do_rescale": true,
  "do_resize": true,
  "image_mean": [
    0.48145466,
    0.4578275,
    0.40821073
  ],
  "image_processor_type": "Qwen2VLImageProcessorFast",
  "image_std": [
    0.26862954,
    0.26130258,
    0.27577711
  ],
  "input_data_format": null,
  "max_pixels": 12845056,
  "merge_size": 2,
  "min_pixels": 3136,
  "patch_size": 14,
  "processor_class": "Qwen2VLProcessor",
  "resample": 3,
  "rescale_factor": 0.00392156862745098,
  "return_tensors": null,
  "size": {
    "longest_edge": 12845056,
    "shortest_edge": 3136
  },
  "temporal_patch_size": 2
}
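Four values bound how images are resized before they reach the vision encoder: `patch_size` (14), `merge_size` (2), `min_pixels` (3136 = 56 × 56) and `max_pixels` (12845056 = 3584 × 3584). The sketch below is modeled on the `smart_resize` helper used by Qwen2-VL-style image processors. It rounds each side to a multiple of 28 (14 × 2) and rescales when the pixel count falls outside the allowed range. It omits the original's aspect-ratio check. The token count assumes each 2 × 2 group of patches becomes one token.

```python
import math

# Values from processor/preprocessor_config.json above.
PATCH_SIZE = 14
MERGE_SIZE = 2
MIN_PIXELS = 3136        # 56 * 56
MAX_PIXELS = 12845056    # 3584 * 3584


def smart_resize(height, width, factor=PATCH_SIZE * MERGE_SIZE,
                 min_pixels=MIN_PIXELS, max_pixels=MAX_PIXELS):
    """Round (height, width) to multiples of `factor`, keeping the pixel count
    within [min_pixels, max_pixels] and roughly preserving aspect ratio."""
    if height < factor or width < factor:
        raise ValueError(f"height and width must be at least {factor}")
    h_bar = round(height / factor) * factor
    w_bar = round(width / factor) * factor
    if h_bar * w_bar > max_pixels:
        # Too large: shrink both sides by the same ratio, rounding down.
        beta = math.sqrt((height * width) / max_pixels)
        h_bar = math.floor(height / beta / factor) * factor
        w_bar = math.floor(width / beta / factor) * factor
    elif h_bar * w_bar < min_pixels:
        # Too small: enlarge both sides by the same ratio, rounding up.
        beta = math.sqrt(min_pixels / (height * width))
        h_bar = math.ceil(height * beta / factor) * factor
        w_bar = math.ceil(width * beta / factor) * factor
    return h_bar, w_bar


def visual_token_count(height, width):
    """Tokens after merging MERGE_SIZE x MERGE_SIZE groups of patches."""
    h, w = smart_resize(height, width)
    return (h // PATCH_SIZE) * (w // PATCH_SIZE) // (MERGE_SIZE ** 2)


print(smart_resize(1024, 1024), visual_token_count(1024, 1024))  # -> (1036, 1036) 1369
```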

processor/special_tokens_map.json

ADDED

@@ -0,0 +1,31 @@

{
  "additional_special_tokens": [
    "<|im_start|>",
    "<|im_end|>",
    "<|object_ref_start|>",
    "<|object_ref_end|>",
    "<|box_start|>",
    "<|box_end|>",
    "<|quad_start|>",
    "<|quad_end|>",
    "<|vision_start|>",
    "<|vision_end|>",
    "<|vision_pad|>",
    "<|image_pad|>",
    "<|video_pad|>"
  ],
  "eos_token": {
    "content": "<|im_end|>",
    "lstrip": false,
    "normalized": false,
    "rstrip": false,
    "single_word": false
  },
  "pad_token": {
    "content": "<|endoftext|>",
    "lstrip": false,
    "normalized": false,
    "rstrip": false,
    "single_word": false
  }
}

processor/tokenizer.json

ADDED

@@ -0,0 +1,3 @@

version https://git-lfs.github.com/spec/v1
oid sha256:9c5ae00e602b8860cbd784ba82a8aa14e8feecec692e7076590d014d7b7fdafa
size 11421896
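The three lines above are a Git LFS pointer, not the tokenizer itself. `oid` is the SHA-256 digest of the real file and `size` is its length in bytes. Below is a minimal sketch that parses the pointer and checks a downloaded copy against it. It assumes the standard pointer layout (`version`, `oid sha256:<hex>`, `size`), and both helper names are hypothetical.

```python
import hashlib
import os

POINTER = """version https://git-lfs.github.com/spec/v1
oid sha256:9c5ae00e602b8860cbd784ba82a8aa14e8feecec692e7076590d014d7b7fdafa
size 11421896
"""


def parse_lfs_pointer(text):
    """Parse a Git LFS pointer into {"version", "oid", "size"}."""
    fields = dict(line.split(" ", 1) for line in text.strip().splitlines())
    algo, digest = fields["oid"].split(":", 1)
    if algo != "sha256":
        raise ValueError(f"unsupported oid algorithm: {algo}")
    return {"version": fields["version"], "oid": digest, "size": int(fields["size"])}


def matches_pointer(path, pointer):
    """Check a local file against the pointer's size and SHA-256 digest."""
    if os.path.getsize(path) != pointer["size"]:
        return False
    sha = hashlib.sha256()
    with open(path, "rb") as f:
        # Hash in 1 MiB chunks so large files are not read into memory at once.
        for chunk in iter(lambda: f.read(1 << 20), b""):
            sha.update(chunk)
    return sha.hexdigest() == pointer["oid"]


info = parse_lfs_pointer(POINTER)
print(info["size"], info["oid"][:12])
```

Checking the size first avoids hashing an 11 MB file that is already known to be wrong.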

processor/tokenizer_config.json

ADDED

@@ -0,0 +1,208 @@

{
  "add_bos_token": false,
  "add_prefix_space": false,
  "added_tokens_decoder": {
    "151643": {
      "content": "<|endoftext|>",
      "lstrip": false,
      "normalized": false,
      "rstrip": false,
      "single_word": false,
      "special": true
    },
    "151644": {
      "content": "<|im_start|>",
      "lstrip": false,
      "normalized": false,
      "rstrip": false,
      "single_word": false,
      "special": true
    },
    "151645": {
      "content": "<|im_end|>",
      "lstrip": false,
      "normalized": false,
      "rstrip": false,
      "single_word": false,
      "special": true
    },
    "151646": {
      "content": "<|object_ref_start|>",
      "lstrip": false,
      "normalized": false,
      "rstrip": false,
      "single_word": false,
      "special": true
    },
    "151647": {
      "content": "<|object_ref_end|>",
      "lstrip": false,
      "normalized": false,
      "rstrip": false,
      "single_word": false,
      "special": true
    },
    "151648": {
      "content": "<|box_start|>",
      "lstrip": false,
      "normalized": false,
      "rstrip": false,
      "single_word": false,
      "special": true
    },
    "151649": {
      "content": "<|box_end|>",
      "lstrip": false,
      "normalized": false,
      "rstrip": false,
      "single_word": false,
      "special": true
    },
    "151650": {
      "content": "<|quad_start|>",
      "lstrip": false,
      "normalized": false,
      "rstrip": false,
      "single_word": false,
      "special": true
    },
    "151651": {
      "content": "<|quad_end|>",
      "lstrip": false,
      "normalized": false,
      "rstrip": false,
      "single_word": false,
      "special": true
    },
    "151652": {
      "content": "<|vision_start|>",
      "lstrip": false,
      "normalized": false,
      "rstrip": false,
      "single_word": false,
      "special": true
    },
    "151653": {
      "content": "<|vision_end|>",
      "lstrip": false,
      "normalized": false,
      "rstrip": false,
      "single_word": false,
      "special": true
    },
    "151654": {
      "content": "<|vision_pad|>",
      "lstrip": false,
      "normalized": false,
      "rstrip": false,
      "single_word": false,
      "special": true
    },
    "151655": {
      "content": "<|image_pad|>",
      "lstrip": false,
      "normalized": false,
      "rstrip": false,
      "single_word": false,
      "special": true
    },
    "151656": {
      "content": "<|video_pad|>",
      "lstrip": false,
      "normalized": false,
      "rstrip": false,
      "single_word": false,
      "special": true
    },
    "151657": {
      "content": "<tool_call>",
      "lstrip": false,
      "normalized": false,
      "rstrip": false,
      "single_word": false,
      "special": false
    },
    "151658": {
      "content": "</tool_call>",
      "lstrip": false,
      "normalized": false,
      "rstrip": false,
      "single_word": false,
      "special": false
    },
    "151659": {
      "content": "<|fim_prefix|>",
      "lstrip": false,
      "normalized": false,
      "rstrip": false,
      "single_word": false,
      "special": false
    },
    "151660": {
      "content": "<|fim_middle|>",
      "lstrip": false,
      "normalized": false,
      "rstrip": false,
      "single_word": false,
      "special": false
    },
    "151661": {
      "content": "<|fim_suffix|>",
      "lstrip": false,
      "normalized": false,
      "rstrip": false,
      "single_word": false,
      "special": false
    },
    "151662": {
      "content": "<|fim_pad|>",
      "lstrip": false,
      "normalized": false,
      "rstrip": false,
      "single_word": false,
      "special": false
    },
    "151663": {
      "content": "<|repo_name|>",
      "lstrip": false,
      "normalized": false,
      "rstrip": false,
      "single_word": false,
      "special": false
    },
    "151664": {
      "content": "<|file_sep|>",
      "lstrip": false,
      "normalized": false,
      "rstrip": false,
      "single_word": false,
      "special": false
    }
  },
  "additional_special_tokens": [
    "<|im_start|>",
    "<|im_end|>",
    "<|object_ref_start|>",
    "<|object_ref_end|>",
    "<|box_start|>",
    "<|box_end|>",
    "<|quad_start|>",
    "<|quad_end|>",
    "<|vision_start|>",
    "<|vision_end|>",
    "<|vision_pad|>",
    "<|image_pad|>",
    "<|video_pad|>"
  ],
  "bos_token": null,
  "clean_up_tokenization_spaces": false,
  "eos_token": "<|im_end|>",
  "errors": "replace",
  "extra_special_tokens": {},
  "model_max_length": 131072,
  "pad_token": "<|endoftext|>",
  "processor_class": "Qwen2VLProcessor",
  "split_special_tokens": false,
  "tokenizer_class": "Qwen2Tokenizer",
  "unk_token": null
}

processor/video_preprocessor_config.json

ADDED

@@ -0,0 +1,44 @@

{
  "crop_size": null,
  "data_format": "channels_first",
  "default_to_square": true,
  "device": null,
  "do_center_crop": null,
  "do_convert_rgb": true,
  "do_normalize": true,
  "do_pad": null,
  "do_rescale": true,
  "do_resize": true,
  "do_sample_frames": false,
  "fps": null,
  "image_mean": [
    0.48145466,
    0.4578275,
    0.40821073
  ],
  "image_std": [
    0.26862954,
    0.26130258,
    0.27577711
  ],
  "input_data_format": null,
  "max_frames": 768,
  "max_pixels": 12845056,
  "merge_size": 2,
  "min_frames": 4,
  "min_pixels": 3136,
  "num_frames": null,
  "patch_size": 14,
  "processor_class": "Qwen2VLProcessor",
  "resample": 3,
  "rescale_factor": 0.00392156862745098,
  "return_metadata": false,
  "size": {
    "longest_edge": 12845056,
    "shortest_edge": 3136
  },
  "size_divisor": null,
  "temporal_patch_size": 2,
  "video_metadata": null,
  "video_processor_type": "Qwen2VLVideoProcessor"
}
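For video, `temporal_patch_size` (2) groups consecutive frames into one patch along time. This is in addition to the spatial 14 × 14 patches that are merged 2 × 2. The sketch below computes the resulting grid and merged token count for a clip that has already been resized and frame-sampled. It reflects our reading of the Qwen2-VL processors rather than their code, and `video_token_grid` is a hypothetical helper. The README itself only demonstrates image inputs.

```python
# Values from processor/video_preprocessor_config.json above.
PATCH_SIZE = 14
MERGE_SIZE = 2
TEMPORAL_PATCH_SIZE = 2


def video_token_grid(num_frames, height, width):
    """Return (grid_t, grid_h, grid_w, merged_tokens) for an already-resized clip.

    Assumes height and width are multiples of PATCH_SIZE * MERGE_SIZE and that
    num_frames is a multiple of TEMPORAL_PATCH_SIZE (frame pairs share a patch).
    """
    factor = PATCH_SIZE * MERGE_SIZE
    if height % factor or width % factor:
        raise ValueError(f"height and width must be multiples of {factor}")
    if num_frames % TEMPORAL_PATCH_SIZE:
        raise ValueError(f"num_frames must be a multiple of {TEMPORAL_PATCH_SIZE}")
    grid_t = num_frames // TEMPORAL_PATCH_SIZE
    grid_h = height // PATCH_SIZE
    grid_w = width // PATCH_SIZE
    # Each MERGE_SIZE x MERGE_SIZE block of spatial patches becomes one token.
    merged = grid_t * grid_h * grid_w // (MERGE_SIZE ** 2)
    return grid_t, grid_h, grid_w, merged


print(video_token_grid(16, 448, 448))  # -> (8, 32, 32, 2048)
```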

processor/vocab.json

ADDED

The diff for this file is too large to render.

quantization_info.json

ADDED

@@ -0,0 +1,6 @@

{
  "quantization_method": "mixed_precision_nf4",
  "description": "First and last transformer blocks kept at bfloat16, middle layers quantized to NF4",
  "high_precision_layers_count": 30,
  "note": "Based on city96/Qwen-Image-gguf approach for better quality"
}

scheduler/scheduler_config.json

ADDED

@@ -0,0 +1,18 @@

{
  "_class_name": "FlowMatchEulerDiscreteScheduler",
  "_diffusers_version": "0.36.0.dev0",
  "base_image_seq_len": 256,
  "base_shift": 0.5,
  "invert_sigmas": false,
  "max_image_seq_len": 8192,
  "max_shift": 0.9,
  "num_train_timesteps": 1000,
  "shift": 1.0,
  "shift_terminal": 0.02,
  "stochastic_sampling": false,
  "time_shift_type": "exponential",
  "use_beta_sigmas": false,
  "use_dynamic_shifting": true,
  "use_exponential_sigmas": false,
  "use_karras_sigmas": false
}
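With `use_dynamic_shifting` enabled, the sigma schedule depends on the latent sequence length, in three steps. First, a shift parameter `mu` is interpolated linearly between `base_shift` at `base_image_seq_len` and `max_shift` at `max_image_seq_len`. Second, `mu` is applied through the exponential time shift. Third, the schedule is stretched so the last sigma equals `shift_terminal`.

The sketch below re-implements those steps in plain Python, following our reading of diffusers' `FlowMatchEulerDiscreteScheduler`. Two inputs are assumptions: the linear starting schedule from 1.0 down to 1/num_steps, and the example sequence length of 4096. That length corresponds to a 1024 × 1024 image, assuming 8× VAE downsampling and 2 × 2 latent packing.

```python
import math

# Values from scheduler/scheduler_config.json above.
BASE_SEQ_LEN = 256
MAX_SEQ_LEN = 8192
BASE_SHIFT = 0.5
MAX_SHIFT = 0.9
SHIFT_TERMINAL = 0.02


def calculate_mu(image_seq_len):
    """Linearly interpolate mu from the latent sequence length."""
    m = (MAX_SHIFT - BASE_SHIFT) / (MAX_SEQ_LEN - BASE_SEQ_LEN)
    b = BASE_SHIFT - m * BASE_SEQ_LEN
    return image_seq_len * m + b


def exponential_time_shift(mu, t, sigma=1.0):
    """time_shift_type="exponential": larger mu pushes sigmas toward 1 (noisier)."""
    return math.exp(mu) / (math.exp(mu) + (1.0 / t - 1.0) ** sigma)


def stretch_to_terminal(sigmas, shift_terminal=SHIFT_TERMINAL):
    """Rescale (1 - sigma) so that the final sigma equals shift_terminal."""
    one_minus = [1.0 - s for s in sigmas]
    scale = one_minus[-1] / (1.0 - shift_terminal)
    return [1.0 - x / scale for x in one_minus]


def flow_match_sigmas(num_steps, image_seq_len):
    if num_steps < 2:
        raise ValueError("num_steps must be at least 2")
    # Assumed starting point: evenly spaced from 1.0 down to 1/num_steps.
    base = [1.0 - i * (1.0 - 1.0 / num_steps) / (num_steps - 1) for i in range(num_steps)]
    mu = calculate_mu(image_seq_len)
    shifted = [exponential_time_shift(mu, t) for t in base]
    return stretch_to_terminal(shifted)


sigmas = flow_match_sigmas(40, 4096)
print(round(calculate_mu(4096), 4), round(sigmas[0], 4), round(sigmas[-1], 4))
```

Longer sequences (larger images) get a larger `mu`, which keeps more of the schedule at high noise levels.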

text_encoder/config.json

ADDED

@@ -0,0 +1,181 @@

{
  "architectures": [
    "Qwen2_5_VLForConditionalGeneration"
  ],
  "attention_dropout": 0.0,
  "bos_token_id": 151643,
  "dtype": "bfloat16",
  "eos_token_id": 151645,
  "hidden_act": "silu",
  "hidden_size": 3584,
  "image_token_id": 151655,
  "initializer_range": 0.02,
  "intermediate_size": 18944,
  "max_position_embeddings": 128000,
  "max_window_layers": 28,
  "model_type": "qwen2_5_vl",
  "num_attention_heads": 28,
  "num_hidden_layers": 28,

|

| 19 |

+

"num_key_value_heads": 4,

|

| 20 |

+

"quantization_config": {

|

| 21 |

+

"_load_in_4bit": true,

|

| 22 |

+

"_load_in_8bit": false,

|

| 23 |

+

"bnb_4bit_compute_dtype": "bfloat16",

|

| 24 |

+

"bnb_4bit_quant_storage": "uint8",

|

| 25 |

+

"bnb_4bit_quant_type": "nf4",

|

| 26 |

+

"bnb_4bit_use_double_quant": true,

|

| 27 |

+

"llm_int8_enable_fp32_cpu_offload": false,

|

| 28 |

+

"llm_int8_has_fp16_weight": false,

|

| 29 |

+

"llm_int8_skip_modules": [

|

| 30 |

+

"transformer_blocks.0.img_mod.1",

|

| 31 |

+

"transformer_blocks.0.attn.to_q",

|

| 32 |

+

"transformer_blocks.0.attn.to_k",

|

| 33 |

+

"transformer_blocks.0.attn.to_v",

|

| 34 |

+

"transformer_blocks.0.attn.add_k_proj",

|

| 35 |

+

"transformer_blocks.0.attn.add_v_proj",

|

| 36 |

+

"transformer_blocks.0.attn.add_q_proj",

|

| 37 |

+

"transformer_blocks.0.attn.to_out.0",

|

| 38 |

+

"transformer_blocks.0.attn.to_add_out",

|

| 39 |

+

"transformer_blocks.0.img_mlp.net.0.proj",

|

| 40 |

+

"transformer_blocks.0.img_mlp.net.2",

|

| 41 |

+

"transformer_blocks.0.txt_mod.1",

|

| 42 |

+

"transformer_blocks.0.txt_mlp.net.0.proj",

|

| 43 |

+

"transformer_blocks.0.txt_mlp.net.2",

|

| 44 |

+

"transformer_blocks.59.img_mod.1",

|

| 45 |

+

"transformer_blocks.59.attn.to_q",

|

| 46 |

+

"transformer_blocks.59.attn.to_k",

|

| 47 |

+

"transformer_blocks.59.attn.to_v",

|

| 48 |

+

"transformer_blocks.59.attn.add_k_proj",

|

| 49 |

+

"transformer_blocks.59.attn.add_v_proj",

|

| 50 |

+

"transformer_blocks.59.attn.add_q_proj",

|

| 51 |

+

"transformer_blocks.59.attn.to_out.0",

|

| 52 |

+

"transformer_blocks.59.attn.to_add_out",

|

| 53 |

+

"transformer_blocks.59.img_mlp.net.0.proj",

|

| 54 |

+

"transformer_blocks.59.img_mlp.net.2",

|

| 55 |

+

"transformer_blocks.59.txt_mod.1",

|

| 56 |

+

"transformer_blocks.59.txt_mlp.net.0.proj",

|

| 57 |

+

"transformer_blocks.59.txt_mlp.net.2",

|

| 58 |

+

"norm_out.linear",

|

| 59 |

+

"proj_out"

|

| 60 |

+

],

|

| 61 |

+

"llm_int8_threshold": 6.0,

|

| 62 |

+

"load_in_4bit": true,

|

| 63 |

+

"load_in_8bit": false,

|

| 64 |

+

"quant_method": "bitsandbytes"

|

| 65 |

+

},

|

| 66 |

+

"rms_norm_eps": 1e-06,

|

| 67 |

+

"rope_scaling": {

|

| 68 |

+

"mrope_section": [

|

| 69 |

+

16,

|

| 70 |

+

24,

|

| 71 |

+

24

|

| 72 |

+

],

|

| 73 |

+

"rope_type": "default",

|

| 74 |

+

"type": "default"

|

| 75 |

+

},

|

| 76 |

+

"rope_theta": 1000000.0,

|

| 77 |

+

"sliding_window": 32768,

|

| 78 |

+

"text_config": {

|

| 79 |

+

"architectures": [

|

| 80 |

+

"Qwen2_5_VLForConditionalGeneration"

|

| 81 |

+

],

|

| 82 |

+

"attention_dropout": 0.0,

|

| 83 |

+

"bos_token_id": 151643,

|

| 84 |

+

"dtype": "bfloat16",

|

| 85 |

+

"eos_token_id": 151645,

|

| 86 |

+

"hidden_act": "silu",

|

| 87 |

+

"hidden_size": 3584,

|

| 88 |

+

"image_token_id": null,

|

| 89 |

+

"initializer_range": 0.02,

|

| 90 |

+

"intermediate_size": 18944,

|

| 91 |

+

"layer_types": [

|

| 92 |

+

"full_attention",

|

| 93 |

+

"full_attention",

|

| 94 |

+

"full_attention",

|

| 95 |

+

"full_attention",

|

| 96 |

+

"full_attention",

|

| 97 |

+

"full_attention",

|

| 98 |

+

"full_attention",

|

| 99 |

+

"full_attention",

|

| 100 |

+

"full_attention",

|

| 101 |

+

"full_attention",

|

| 102 |

+

"full_attention",

|

| 103 |

+

"full_attention",

|

| 104 |

+

"full_attention",

|

| 105 |

+

"full_attention",

|

| 106 |

+

"full_attention",

|

| 107 |

+

"full_attention",

|

| 108 |

+

"full_attention",

|

| 109 |

+

"full_attention",

|

| 110 |

+

"full_attention",

|

| 111 |

+

"full_attention",

|

| 112 |

+

"full_attention",

|

| 113 |

+

"full_attention",

|

| 114 |

+

"full_attention",

|

| 115 |

+

"full_attention",

|

| 116 |

+

"full_attention",

|

| 117 |

+

"full_attention",

|

| 118 |

+

"full_attention",

|

| 119 |

+

"full_attention"

|

| 120 |

+

],

|

| 121 |

+

"max_position_embeddings": 128000,

|

| 122 |

+

"max_window_layers": 28,

|

| 123 |

+

"model_type": "qwen2_5_vl_text",

|

| 124 |

+

"num_attention_heads": 28,

|

| 125 |

+

"num_hidden_layers": 28,

|

| 126 |

+

"num_key_value_heads": 4,

|

| 127 |

+

"rms_norm_eps": 1e-06,

|

| 128 |

+

"rope_scaling": {

|

| 129 |

+

"mrope_section": [

|

| 130 |

+

16,

|

| 131 |

+

24,

|

| 132 |

+

24

|

| 133 |

+

],

|

| 134 |

+

"rope_type": "default",

|

| 135 |

+

"type": "default"

|

| 136 |

+

},

|

| 137 |

+

"rope_theta": 1000000.0,

|

| 138 |

+

"sliding_window": null,

|

| 139 |

+

"use_cache": true,

|

| 140 |

+

"use_sliding_window": false,

|

| 141 |

+

"video_token_id": null,

|

| 142 |

+

"vision_end_token_id": 151653,

|

| 143 |

+

"vision_start_token_id": 151652,

|

| 144 |

+

"vision_token_id": 151654,

|

| 145 |

+

"vocab_size": 152064

|

| 146 |

+

},

|

| 147 |

+

"tie_word_embeddings": false,

|

| 148 |

+

"transformers_version": "4.56.2",

|

| 149 |

+

"use_cache": true,

|

| 150 |

+

"use_sliding_window": false,

|

| 151 |

+

"video_token_id": 151656,

|

| 152 |

+

"vision_config": {

|

| 153 |

+

"depth": 32,

|

| 154 |

+

"dtype": "bfloat16",

|

| 155 |

+

"fullatt_block_indexes": [

|

| 156 |

+

7,

|

| 157 |

+

15,

|

| 158 |

+

23,

|

| 159 |

+

31

|

| 160 |

+

],

|

| 161 |

+

"hidden_act": "silu",

|

| 162 |

+

"hidden_size": 1280,

|

| 163 |

+

"in_channels": 3,

|

| 164 |

+

"in_chans": 3,

|

| 165 |

+

"initializer_range": 0.02,

|

| 166 |

+

"intermediate_size": 3420,

|

| 167 |

+

"model_type": "qwen2_5_vl",

|

| 168 |

+

"num_heads": 16,

|

| 169 |

+

"out_hidden_size": 3584,

|

| 170 |

+

"patch_size": 14,

|

| 171 |

+

"spatial_merge_size": 2,

|

| 172 |

+

"spatial_patch_size": 14,

|

| 173 |

+

"temporal_patch_size": 2,

|

| 174 |

+

"tokens_per_second": 2,

|

| 175 |

+

"window_size": 112

|

| 176 |

+

},

|

| 177 |

+

"vision_end_token_id": 151653,

|

| 178 |

+

"vision_start_token_id": 151652,

|

| 179 |

+

"vision_token_id": 151654,

|

| 180 |

+

"vocab_size": 152064

|

| 181 |

+

}
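
Several relationships between these fields can be checked by hand:

- 3584 hidden units over 28 query heads gives a head dimension of 128.
- 28 query heads sharing 4 key/value heads is grouped-query attention, with 7 query heads per KV head.
- The `mrope_section` entries [16, 24, 24] sum to 64, half the head dimension. This is consistent with M-RoPE splitting the rotary frequency pairs across temporal, height and width positions.
- In the vision tower, a 14-pixel patch with `spatial_merge_size` 2 means each token passed to the language model covers a 28x28 pixel area.

The sketch below re-derives these numbers from a dict copy of the config values. It flattens a few keys into hypothetical names such as `vision_depth` and does not load the model.

```python
# Values copied from text_encoder/config.json; some keys are renamed (flattened) for brevity.
config = {
    "hidden_size": 3584,
    "num_attention_heads": 28,
    "num_key_value_heads": 4,
    "mrope_section": [16, 24, 24],
    "vision_patch_size": 14,          # vision_config.patch_size
    "spatial_merge_size": 2,          # vision_config.spatial_merge_size
    "vision_depth": 32,               # vision_config.depth
    "fullatt_block_indexes": [7, 15, 23, 31],
}


def derived_shapes(cfg):
    head_dim = cfg["hidden_size"] // cfg["num_attention_heads"]
    return {
        "head_dim": head_dim,
        # Grouped-query attention: query heads per shared key/value head.
        "queries_per_kv_head": cfg["num_attention_heads"] // cfg["num_key_value_heads"],
        # M-RoPE sections should cover the head_dim / 2 rotary frequency pairs.
        "rotary_pairs": sum(cfg["mrope_section"]),
        # Pixels per side covered by one merged visual token.
        "pixels_per_visual_token_side": cfg["vision_patch_size"] * cfg["spatial_merge_size"],
        # Vision blocks that are not in the full-attention list (windowed attention).
        "windowed_vision_blocks": cfg["vision_depth"] - len(cfg["fullatt_block_indexes"]),
    }


if __name__ == "__main__":
    for name, value in derived_shapes(config).items():
        print(f"{name}: {value}")
```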

text_encoder/generation_config.json

ADDED

@@ -0,0 +1,14 @@

{
  "bos_token_id": 151643,
  "do_sample": true,
  "eos_token_id": [
    151645,
    151643
  ],
  "pad_token_id": 151643,
  "repetition_penalty": 1.05,
  "temperature": 0.1,
  "top_k": 1,
  "top_p": 0.001,
  "transformers_version": "4.56.2"
}
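
Although `do_sample` is true, a `top_k` of 1 keeps only the highest-scoring token. Sampling with these settings therefore behaves like greedy decoding, and the low `temperature` and `top_p` point the same way. In a text-to-image pipeline, the text encoder is typically used to produce prompt embeddings rather than to call `generate()`, so these values would likely matter only if the model generated text. The pure-Python sketch below applies a repetition penalty, temperature, top-k and top-p, in that order, to a toy list of logits. It illustrates the idea and is not the transformers implementation. `sample_next` and the toy logits are hypothetical.

```python
import math
import random


def apply_repetition_penalty(logits, previous_ids, penalty=1.05):
    # Penalize already-generated tokens: shrink positive logits, grow negative ones.
    out = list(logits)
    for i in set(previous_ids):
        out[i] = out[i] / penalty if out[i] > 0 else out[i] * penalty
    return out


def sample_next(logits, previous_ids, temperature=0.1, top_k=1, top_p=0.001,
                penalty=1.05, rng=random):
    scores = apply_repetition_penalty(logits, previous_ids, penalty)
    scores = [s / temperature for s in scores]
    # Top-k: keep the k highest-scoring token ids, best first.
    ranked = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)[:top_k]
    # Softmax over the surviving tokens (max-subtracted for numerical stability).
    m = max(scores[i] for i in ranked)
    weights = [math.exp(scores[i] - m) for i in ranked]
    total = sum(weights)
    probs = [w / total for w in weights]
    # Top-p: keep the smallest prefix reaching top_p (always at least one token).
    kept, cumulative = [], 0.0
    for i, p in zip(ranked, probs):
        kept.append((i, p))
        cumulative += p
        if cumulative >= top_p:
            break
    ids, ps = zip(*kept)
    return rng.choices(ids, weights=ps, k=1)[0]


if __name__ == "__main__":
    toy_logits = [1.2, 3.4, 0.5, 3.3]
    rng = random.Random(0)
    picks = {sample_next(toy_logits, previous_ids=[], rng=rng) for _ in range(100)}
    print(picks)  # {1}: only the argmax survives top_k=1
```

With `previous_ids=[1]`, the penalty lowers token 1's logit from 3.4 to about 3.24, so token 3 (3.3) becomes the only survivor.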

text_encoder/model-00001-of-00002.safetensors

ADDED

@@ -0,0 +1,3 @@

version https://git-lfs.github.com/spec/v1
oid sha256:1905aae08b7a148c6f2a69939fe196c314f7a75652934e7b85fe222976a28663
size 4809612598

text_encoder/model-00002-of-00002.safetensors

ADDED

@@ -0,0 +1,3 @@

version https://git-lfs.github.com/spec/v1
oid sha256:2b727105e5dbd38583aee6a600cad89d2c911ed6592b728702fd4bb60f108704
size 281149198
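
The two `.safetensors` entries are Git LFS pointer files, not the weights themselves. Each one records the spec version, the SHA-256 of the real object, and its size in bytes. Together the two shards come to 4,809,612,598 + 281,149,198 = 5,090,761,796 bytes (about 5.1 GB). The sketch below parses a pointer and checks a downloaded file against it using only the standard library. `parse_lfs_pointer` and `verify_download` are hypothetical helper names.

```python
import hashlib
import os


def parse_lfs_pointer(text):
    """Parse the 'key value' lines of a Git LFS pointer file."""
    fields = dict(line.split(" ", 1) for line in text.strip().splitlines())
    algo, digest = fields["oid"].split(":", 1)
    return {"version": fields["version"], "algo": algo,
            "digest": digest, "size": int(fields["size"])}


def verify_download(path, pointer):
    """Check a downloaded file's size and SHA-256 against its LFS pointer."""
    if os.path.getsize(path) != pointer["size"]:
        return False
    h = hashlib.sha256()
    with open(path, "rb") as f:
        # Hash in 1 MiB chunks so multi-GB shards never sit fully in memory.
        for chunk in iter(lambda: f.read(1 << 20), b""):
            h.update(chunk)
    return h.hexdigest() == pointer["digest"]


POINTER_SHARD_2 = """version https://git-lfs.github.com/spec/v1
oid sha256:2b727105e5dbd38583aee6a600cad89d2c911ed6592b728702fd4bb60f108704
size 281149198
"""

if __name__ == "__main__":
    p = parse_lfs_pointer(POINTER_SHARD_2)
    print(p["algo"], p["size"])
    # After downloading the real shard, something like:
    # verify_download("text_encoder/model-00002-of-00002.safetensors", p)
```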

text_encoder/model.safetensors.index.json

ADDED

The diff for this file is too large to render.
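
A sharded checkpoint's `model.safetensors.index.json` normally holds a `metadata` object and a `weight_map` that maps each tensor name to the shard file storing it. This is how a loader knows which of the two `model-0000N-of-00002.safetensors` files to open for a given tensor. The sketch below groups tensor names by shard. The trimmed index and its tensor names are hypothetical stand-ins, since the real file is too large to show here.

```python
import json
from collections import defaultdict


def shards_by_file(index):
    """Group tensor names by the shard file that stores them."""
    groups = defaultdict(list)
    for tensor_name, shard in index["weight_map"].items():
        groups[shard].append(tensor_name)
    return {shard: sorted(names) for shard, names in groups.items()}


# Hypothetical, heavily trimmed index; the real file lists every tensor.
EXAMPLE_INDEX = json.loads("""
{
  "weight_map": {
    "model.embed_tokens.weight": "model-00001-of-00002.safetensors",
    "model.norm.weight": "model-00001-of-00002.safetensors",
    "lm_head.weight": "model-00002-of-00002.safetensors"
  }
}
""")

if __name__ == "__main__":
    for shard, names in shards_by_file(EXAMPLE_INDEX).items():
        print(shard, len(names), "tensors")
```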

tokenizer/added_tokens.json

ADDED

@@ -0,0 +1,24 @@

{
  "</tool_call>": 151658,
  "<tool_call>": 151657,
  "<|box_end|>": 151649,
  "<|box_start|>": 151648,
  "<|endoftext|>": 151643,
  "<|file_sep|>": 151664,
  "<|fim_middle|>": 151660,
  "<|fim_pad|>": 151662,
  "<|fim_prefix|>": 151659,
  "<|fim_suffix|>": 151661,
  "<|im_end|>": 151645,
  "<|im_start|>": 151644,
  "<|image_pad|>": 151655,
  "<|object_ref_end|>": 151647,
  "<|object_ref_start|>": 151646,
  "<|quad_end|>": 151651,
  "<|quad_start|>": 151650,
  "<|repo_name|>": 151663,
  "<|video_pad|>": 151656,
  "<|vision_end|>": 151653,
  "<|vision_pad|>": 151654,
  "<|vision_start|>": 151652
}
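
Several of these added-token IDs are the same ones the text encoder config refers to by number:

- `<|image_pad|>` is `image_token_id` 151655, and `<|video_pad|>` is `video_token_id` 151656.
- `<|vision_start|>`, `<|vision_end|>` and `<|vision_pad|>` are 151652, 151653 and 151654.
- `<|endoftext|>` is the BOS/pad ID 151643, and `<|im_end|>` is the EOS ID 151645.

The sketch below cross-checks those pairs using dict copies of the relevant entries from both files. It also confirms that `vocab_size` (152064) is larger than the largest added ID (151664). The gap suggests the embedding table is padded beyond the IDs in use.

```python
# Subset of tokenizer/added_tokens.json (token -> id).
ADDED_TOKENS = {
    "<|endoftext|>": 151643,
    "<|im_end|>": 151645,
    "<|vision_start|>": 151652,
    "<|vision_end|>": 151653,
    "<|vision_pad|>": 151654,
    "<|image_pad|>": 151655,
    "<|video_pad|>": 151656,
    "<|file_sep|>": 151664,
}

# Subset of text_encoder/config.json (field -> id).
CONFIG_IDS = {
    "bos_token_id": 151643,
    "eos_token_id": 151645,
    "vision_start_token_id": 151652,
    "vision_end_token_id": 151653,
    "vision_token_id": 151654,
    "image_token_id": 151655,
    "video_token_id": 151656,
}

# Which added token each config field is expected to name.
EXPECTED = {
    "bos_token_id": "<|endoftext|>",
    "eos_token_id": "<|im_end|>",
    "vision_start_token_id": "<|vision_start|>",
    "vision_end_token_id": "<|vision_end|>",
    "vision_token_id": "<|vision_pad|>",
    "image_token_id": "<|image_pad|>",
    "video_token_id": "<|video_pad|>",
}

VOCAB_SIZE = 152064


def mismatches(added, config_ids, expected):
    """Return {field: (config_id, tokenizer_id)} for every pair that disagrees."""
    return {field: (config_ids[field], added[token])
            for field, token in expected.items()
            if config_ids[field] != added[token]}


if __name__ == "__main__":
    print(mismatches(ADDED_TOKENS, CONFIG_IDS, EXPECTED) or "all IDs agree")
    print("headroom in embedding table:", VOCAB_SIZE - 1 - max(ADDED_TOKENS.values()))
```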

tokenizer/chat_template.jinja

ADDED

@@ -0,0 +1,54 @@

{%- if tools %}
{{- '<|im_start|>system\n' }}
{%- if messages[0]['role'] == 'system' %}
{{- messages[0]['content'] }}
{%- else %}
{{- 'You are a helpful assistant.' }}
{%- endif %}
{{- "\n\n# Tools\n\nYou may call one or more functions to assist with the user query.\n\nYou are provided with function signatures within <tools></tools> XML tags:\n<tools>" }}
{%- for tool in tools %}
{{- "\n" }}
{{- tool | tojson }}
{%- endfor %}
{{- "\n</tools>\n\nFor each function call, return a json object with function name and arguments within <tool_call></tool_call> XML tags:\n<tool_call>\n{\"name\": <function-name>, \"arguments\": <args-json-object>}\n</tool_call><|im_end|>\n" }}
{%- else %}
{%- if messages[0]['role'] == 'system' %}
{{- '<|im_start|>system\n' + messages[0]['content'] + '<|im_end|>\n' }}
{%- else %}
{{- '<|im_start|>system\nYou are a helpful assistant.<|im_end|>\n' }}
{%- endif %}
{%- endif %}
{%- for message in messages %}
{%- if (message.role == "user") or (message.role == "system" and not loop.first) or (message.role == "assistant" and not message.tool_calls) %}
{{- '<|im_start|>' + message.role + '\n' + message.content + '<|im_end|>' + '\n' }}
{%- elif message.role == "assistant" %}
{{- '<|im_start|>' + message.role }}
{%- if message.content %}
{{- '\n' + message.content }}
{%- endif %}